Robots.txt and Fake “Files”

While checking my Caddy logs, I realised two things.

Firstly, that I was blocking Google’s crawlers because they were requesting resources such as /.git and /.env (why crawl those?!!), and secondly, that various bots are trying to steal things from my sites.

Since all of my sites sit behind Caddy and fall into either category a) “crawl but don’t annoy” or category b) “don’t bother crawling”, I decided to serve robots.txt from Caddy itself (and hopefully stop Fail2Banning Googlebot).

Enter the robots.txt snippets: Caddyfile snippets, imported into each site, that either block all crawlers (while still banning the ones that ignore robots.txt) or allow crawling but disallow certain resources (so well-behaved crawlers should no longer get banned automatically).

Here’s a nice pair of snippets that can be imported into sites to do this, no robots.txt files required.

```caddyfile
(robots-block) {
	@robots path /robots.txt

	handle @robots {
		respond 200 {
			body <<EOF
				User-agent: *
				Disallow: /
				EOF
		}
	}
}

(robots-restrict) {
	@robots path /robots.txt

	handle @robots {
		respond 200 {
			body <<EOF
				User-agent: *
				Disallow: /*.git*
				Disallow: /*.env*
				Disallow: /backup
				Disallow: /home
				Disallow: /old
				Disallow: /info.php
				Allow: /
				EOF
		}
	}
}
```
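Using the snippets is then a matter of one `import` per site block. The sketch below assumes two hypothetical sites, `www.example.com` (category a) and `private.example.com` (category b), proxied to made-up upstreams on `localhost`. The snippets need to be defined earlier in the same Caddyfile than the sites that import them, and the `<<EOF` heredocs they use need Caddy 2.7 or newer.

```caddyfile
# Hypothetical hosts and upstreams; replace with your own.

# Category a) crawl, but stay out of the sensitive paths.
www.example.com {
	import robots-restrict
	reverse_proxy localhost:8080
}

# Category b) don't bother crawling at all.
private.example.com {
	import robots-block
	reverse_proxy localhost:9000
}
```

One detail worth knowing about the restrict list: robots.txt rules are prefix matches, so `Disallow: /home` also covers `/homepage` and `/home.html`, while `/home/` would limit the rule to the directory. Crawlers that follow RFC 9309 (Googlebot included) also honour the `*` wildcard, so `/*.git*` matches `/.git/config` as well as `/repo.git`, and the longer `Disallow` rules take precedence over `Allow: /`.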

With these changes, blocks of Googlebot seem to be dropping, which is a good thing.

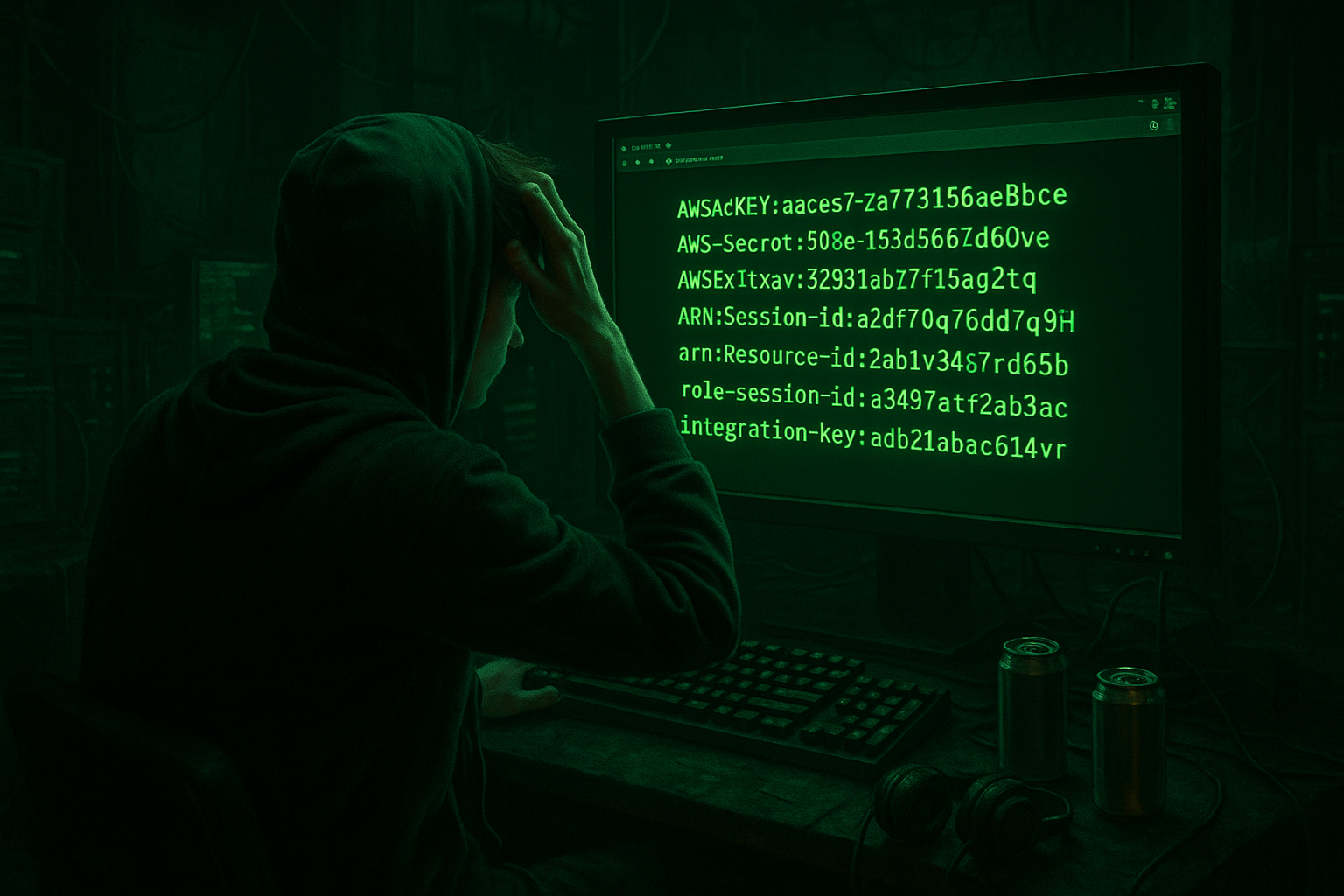

Fake Files for Credential Harvesting

Onwards and upwards to the bots that don’t care about robots.txt and deserve to steal all of the secrets that the servers contain.

Using the same approach, all hosts are configured to answer requests for certain files with a response that probably looks valid (for a while, at least).

```caddyfile
(fake-honeypots) {
	@aws_secret path /aws-secret.yaml

	handle @aws_secret {
		respond 200 {
			body <<EOF
				aws_access_key_id: AKIA2L4V8Q9S7M3B6C1D
				aws_secret_access_key: 8rT5yN2kW4pQ1zL6vB9fS3cA7mE0jH5gD2x
				region: sa-east-1
				EOF
		}
	}

	@aws_yml path /aws.yml

	handle @aws_yml {
		respond 200 {
			body <<EOF
				default: &default
				  region: eu-west-3
				  access_key_id: AKIA6K1J3F9D2S8A4L7P
				  secret_access_key: z7V3bG2nC8xQ5lK1hF6dS4aR0wE9tY2uI5m
				EOF
		}
	}

	@aws_creds path /aws/credentials

	handle @aws_creds {
		respond 200 {
			body <<EOF
…
```

This means that bots trying to scrape credentials from the web servers are instead being fed fake ones by Caddy. The web servers see no load at all; Caddy answers these requests quickly, straight from RAM…!!
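Pulling the honeypots into a site works the same way as the robots.txt snippets. In the hypothetical block below (host and upstream are placeholders), `import fake-honeypots` sits alongside a robots snippet and an upstream. Caddy’s default directive order runs `handle` before `reverse_proxy`, and `respond` ends the request without passing it on, so a bot asking for `/aws.yml` gets its answer from Caddy and the upstream never sees the request.

```caddyfile
# Hypothetical host and upstream; robots-restrict and fake-honeypots
# are assumed to be defined earlier in the same Caddyfile.
www.example.com {
	import robots-restrict
	import fake-honeypots

	# Only requests that matched none of the snippets' handle blocks
	# (e.g. /index.html) are proxied to the web server.
	reverse_proxy localhost:8080
}
```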